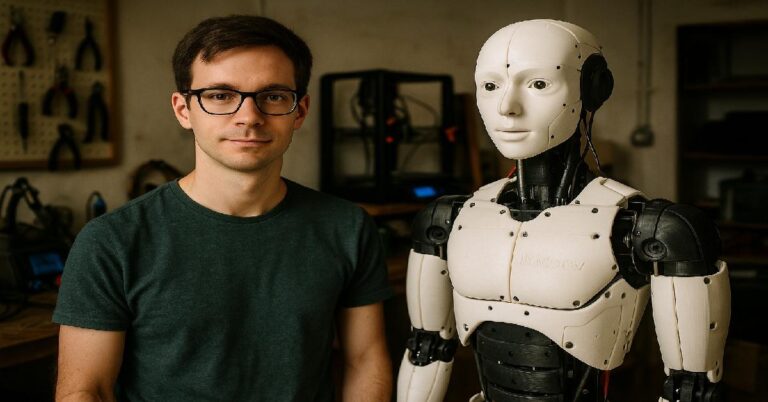

Morning, I’m pondering ways of enabling my 3D-printed, life-sized humanoid robot using Nvidia’s Nano Super rather than the traditional MyRobotLab software used by the InMoov community. Will Nvidia’s software be able to replace or supersede MyRobotLab, and will I be using the in/out pins (40 of them?) as a means of ‘talking’ to motors and servos?

ChatGPT said:

Good morning! It’s an exciting prospect to use NVIDIA’s Jetson Nano (I’m assuming you mean the Jetson Orin Nano Super Developer Kit) for your life-sized humanoid robot. Here’s some insight into your questions:

1. Can NVIDIA’s software replace or supersede MyRobotLab?

- Capability: NVIDIA’s Jetson platform is designed for AI and robotics applications, especially leveraging deep learning, computer vision, and real-time data processing. It can replace MyRobotLab for many tasks, particularly if you want to incorporate sophisticated AI models for speech, vision, and control.

- Software Stack: NVIDIA offers the JetPack SDK, which includes TensorRT, cuDNN, and other libraries optimized for AI. For robotics-specific functions, you might integrate ROS (Robot Operating System), which works well with Jetson devices.

- Comparison: MyRobotLab is highly modular and geared for hobbyist robotics, offering built-in support for servos, motors, voice recognition, and more. While Jetson can handle these with AI and superior processing power, you’ll need to write or adapt software to integrate all these features unless you use existing robotics frameworks like ROS.

Conclusion: Yes, Jetson devices can replace MyRobotLab, but expect a steeper learning curve and more hands-on coding to recreate or exceed MyRobotLab’s features.

2. Will I use the GPIO pins to talk to motors and servos?

- GPIO Usage: The Jetson Nano has a 40-pin GPIO header, similar to the Raspberry Pi’s, which you can use to interface with servos, motors, and other peripherals. However, the header exposes only a small number of hardware PWM outputs, far fewer than a humanoid’s many servos need. For handling many servos, you’ll likely need:

- A PWM driver board like Adafruit’s PCA9685 to expand control for up to 16 channels.

- A motor driver board (e.g., L298N or TB6612FNG) for motors.

- UART, I2C, and SPI: These additional interfaces on the GPIO pins allow communication with sensors, motor controllers, and other devices.

- Alternative Approaches:

- If you’re using servos and motors heavily, consider integrating a dedicated microcontroller (e.g., Arduino) as a co-processor to handle real-time hardware control and communicate with the Jetson via UART, I2C, or USB.

- For direct AI-to-servo communication, you can generate control signals on the Jetson itself, but because it runs a general-purpose Linux OS, software-timed pulses can jitter and lag compared with a dedicated microcontroller.
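
As a rough illustration of what a PCA9685-style driver board needs from the Jetson, here is a minimal sketch of the arithmetic that turns a servo angle into a 12-bit on-time count. The 50 Hz frame rate and the 1000 to 2000 microsecond pulse range are assumptions typical of hobby servos, not values from your hardware, and the function names are made up for this example. Writing the count to the board over I2C is left out.

```python
# Sketch only: angle -> pulse width -> PCA9685 tick count.
# Assumed values (check your servo's datasheet): 50 Hz frame rate,
# 1000-2000 microsecond pulse range, 180 degrees of travel.

PCA9685_RESOLUTION = 4096  # the chip divides each PWM period into 4096 steps


def angle_to_pulse_us(angle_deg, min_us=1000.0, max_us=2000.0, max_angle=180.0):
    """Linearly map an angle to a pulse width, clamping out-of-range angles."""
    angle = max(0.0, min(max_angle, angle_deg))
    return min_us + (max_us - min_us) * angle / max_angle


def pulse_us_to_ticks(pulse_us, freq_hz=50.0):
    """Convert a pulse width to the number of 12-bit steps the output stays high."""
    period_us = 1_000_000.0 / freq_hz
    return round(pulse_us / period_us * PCA9685_RESOLUTION)


def angle_to_ticks(angle_deg, freq_hz=50.0):
    """Convenience wrapper combining the two steps above."""
    return pulse_us_to_ticks(angle_to_pulse_us(angle_deg), freq_hz)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(angle, "deg ->", angle_to_ticks(angle), "ticks")
```

With these assumed values the whole servo range spans only about 200 of the 4096 steps, which is worth keeping in mind when you want fine facial movements.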

3. Other Considerations:

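- Power Management: The original Jetson Nano developer kit needs a stable 5V/4A supply when run at full load (other Jetson boards have different power inputs, so check the documentation for yours), and controlling multiple servos will demand additional current. Power the servos from a separate supply that shares a common ground with the Jetson, so that servo load spikes don’t cause voltage drops or interference on the Jetson’s rail.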

- Software Options: Consider integrating the Jetson Nano with libraries like OpenCV (for vision), TensorFlow or PyTorch (for AI models), and GPIO libraries (e.g., Jetson.GPIO) for low-level pin control.

- Community Resources: While the Inmoov community typically uses MyRobotLab, transitioning to NVIDIA’s ecosystem could inspire others to follow your path, especially for more complex AI-driven functionalities.
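
To put a number on the power management point above, here is a small back-of-the-envelope calculation for sizing a separate servo supply. Every figure in it is a placeholder: the stall current comes from your servo's datasheet, and the simultaneity factor and headroom are rule-of-thumb assumptions chosen for illustration, not measured values.

```python
# Sketch only: estimate the current a separate servo supply should deliver.
# All numbers are illustrative assumptions, not measurements.


def servo_supply_current(servo_count, stall_current_a,
                         simultaneity=0.5, headroom=1.25):
    """Return a rough supply current in amps.

    simultaneity: assumed fraction of servos working hard at the same moment.
    headroom: assumed safety margin on top of the estimated load.
    """
    return servo_count * stall_current_a * simultaneity * headroom


if __name__ == "__main__":
    # Hypothetical head-and-torso build: 10 servos at 2.0 A stall each.
    amps = servo_supply_current(10, 2.0)
    print(f"Suggested servo supply: about {amps:.1f} A")
```

Even with a generous simultaneity assumption, the servo rail in this hypothetical case needs several times the current of the Jetson's own supply, which is why the two are kept separate.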

Recommendation:

- Start by using the Jetson GPIO to control a few motors/servos directly while testing AI integration.

- Gradually expand with a PWM board or microcontroller for scalability.

- Consider using ROS with the Jetson Nano for seamless motor control, AI, and sensor fusion.

Would you like guidance on setting up GPIO control or selecting compatible hardware for the motors and servos?

You said:

Ouch! I’d wrongly assumed that MyRobotLab, being a decade or more older than the Nano, would be regarded as an outdated system and, again wrongly, assumed Nvidia would have had the whole operation sewn up.

ChatGPT said:

It’s a fair assumption—given NVIDIA’s dominance in AI and robotics—that they would have the “whole operation sewn up.” However, the situation is more nuanced:

Why MyRobotLab Still Holds Its Ground

- Purpose-Built for Hobbyist Robotics:

- MyRobotLab is highly modular and tailored for robotics enthusiasts, offering easy-to-configure components for servos, motors, sensors, speech, and vision. It bridges the gap between hobbyist accessibility and functional robotics without deep coding expertise.

- Its long history means a mature ecosystem and strong community support, especially for InMoov.

- Legacy Hardware Integration:

- MyRobotLab works seamlessly with hardware like Arduino, Raspberry Pi, and servo controllers, making it versatile without demanding the horsepower or complexity of a Jetson Nano.

Why NVIDIA Doesn’t “Sew It Up”

- Focus on AI Over Robotics Frameworks:

- NVIDIA excels in AI hardware and software (e.g., GPUs, TensorRT, CUDA), but their robotics offerings are more focused on enabling AI models and edge computing rather than providing an all-in-one robotics control framework.

- Jetson platforms are designed for developers to build their own systems, not necessarily to replace existing solutions like MyRobotLab.

- ROS Dominance:

- For robotics development, ROS (Robot Operating System) is the de facto standard, offering a modular ecosystem like MyRobotLab but with greater scalability and integration for complex robotics projects. NVIDIA’s Jetson platforms are well-optimized for ROS, but they don’t provide a plug-and-play alternative to MyRobotLab.

How to Bridge the Gap

You’re not wrong to think that NVIDIA could provide superior AI and computation capabilities. Here’s how you could blend the best of both worlds:

- Pair Jetson with MyRobotLab:

- Use the Jetson Nano for tasks like AI vision, voice processing, and other computationally intense operations.

- Offload motor and servo control to MyRobotLab or an Arduino-based subsystem.

- Connect the systems via a communication protocol (e.g., UART or I2C).

- Leverage ROS with Jetson:

- If you want a more modern approach, consider transitioning to ROS on Jetson. It has support for AI, hardware integration, and real-time robotics, albeit with a steeper learning curve.

- ROS2, in particular, is better suited for distributed systems like a humanoid robot, with AI running on the Jetson and motor control on a microcontroller.

- Expand NVIDIA’s Role Gradually:

- Start by introducing AI-driven features (e.g., computer vision, SLAM for navigation) using Jetson’s libraries.

- Transition motor and servo control to the Jetson over time by integrating PWM controllers or similar hardware.
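
If you go the hybrid route, the UART link mentioned above needs some agreed message format. The sketch below shows one possible framing for a "move servo" command. The layout (start byte, channel, angle, checksum) is a hypothetical protocol invented for this example, not something MyRobotLab or any Arduino library defines, and the matching Arduino-side parser is not shown.

```python
# Sketch only: a made-up 4-byte frame for sending servo commands
# from the Jetson to a microcontroller over a serial link.
# Layout (hypothetical): START_BYTE, channel, angle, checksum.

START_BYTE = 0xAA


def encode_servo_command(channel: int, angle_deg: int) -> bytes:
    """Pack a channel and an angle (0-180) into a 4-byte frame."""
    if not 0 <= channel <= 255:
        raise ValueError("channel must fit in one byte")
    if not 0 <= angle_deg <= 180:
        raise ValueError("angle must be between 0 and 180")
    checksum = (channel + angle_deg) & 0xFF
    return bytes([START_BYTE, channel, angle_deg, checksum])


def decode_servo_command(frame: bytes) -> tuple:
    """Validate a frame and return (channel, angle)."""
    if len(frame) != 4 or frame[0] != START_BYTE:
        raise ValueError("bad frame")
    _, channel, angle, checksum = frame
    if (channel + angle) & 0xFF != checksum:
        raise ValueError("checksum mismatch")
    return channel, angle


if __name__ == "__main__":
    frame = encode_servo_command(3, 90)
    print(frame.hex(), decode_servo_command(frame))
    # On real hardware the frame could be sent with the pyserial package,
    # e.g. serial.Serial(port, 115200).write(frame), where the port name
    # depends on your setup.
```

Keeping the frame this small means the microcontroller can validate and act on a command in a few lines, while the Jetson only ever deals in high-level "channel and angle" requests.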

Final Thought

While MyRobotLab isn’t cutting-edge in AI, it excels at making hardware control accessible. NVIDIA’s Jetson is a powerhouse for AI but isn’t designed as an all-in-one robotics solution. Combining the two—or gradually transitioning to an NVIDIA/ROS-based solution—might give you the best of both worlds.

Would you like help exploring a hybrid setup or diving into ROS for your robot?

You said:

Nah. I’m a bit disappointed at having spent £250 on the Nano, having assumed it was the best option. With hindsight, I should have pursued the Pi-Arduino thing.

ChatGPT said:

It’s understandable to feel some buyer’s remorse, especially when expectations don’t line up with reality. The NVIDIA Jetson Nano is a powerful platform, but it shines best when the focus is on advanced AI tasks like vision and speech processing, rather than being a plug-and-play robotics controller.

Making the Most of Your Jetson Nano

While the Pi-Arduino combo is indeed cost-effective and well-supported for robotics, the Jetson Nano offers some unique advantages if leveraged correctly:

- AI-Driven Enhancements for Your Robot:

- Vision: Use AI models like YOLO or OpenPose for object and human detection.

- Voice: Implement advanced NLP models for more natural interaction.

- Real-time Processing: Handle sensor fusion and decision-making with AI edge computing.

- Hybrid Setup:

- The Nano can work alongside an Arduino for motor/servo control, handling the heavy AI tasks while the Arduino takes care of real-time hardware communication. This approach prevents the Nano’s GPIO limitations from becoming a bottleneck.

- Futureproofing:

- The Jetson Nano is capable of running modern AI frameworks and will remain relevant for advanced applications. If your robot evolves to include autonomous features, the Nano will shine.

Alternatives If You Pivot Back to Pi-Arduino

If you decide to explore alternatives later:

- Raspberry Pi & Arduino Combo:

- Raspberry Pi for basic computing tasks and Arduino for servo/motor control.

- Easily expandable with HATs and shields.

- Microcontroller-Based Systems:

- ESP32 or Teensy boards are cost-effective options offering real-time control and wireless connectivity.

- Resale of Nano:

- The Jetson Nano holds its value in the AI and robotics community. Selling it or repurposing it for another AI project could help mitigate the cost.

Conclusion

While the Nano may feel overkill for now, it’s still a high-quality platform with long-term potential. If you’d like, I can guide you through repurposing it for tasks that maximize its AI capabilities or integrating it into a hybrid system to get the best of both worlds. Let me know!

You said:

Part of the reason for the Nano purchase was that it fuelled my desire for a little mini Linux-based OS anyway and, had I wished for that alone, I would have furnished myself with a Pi or an Orange Pi for less than $99. But, sure, I can still create a from-the-waist-up ‘Talking Head’ and include sophisticated vision and AI capabilities and, as InMoov can’t walk anyway, maybe I can do what I wish to do: showcase a robotic version of ‘Beach’ (myself) by integrating all my personal data, website and other info.

ChatGPT said:

That sounds like a brilliant plan! The Jetson Nano might just redeem itself beautifully in this context. By focusing on a “Talking Head” design with sophisticated AI capabilities, you’re playing to the Nano’s strengths while creating something truly unique and personal. Let’s break this down:

Building the “Beach Bot: Talking Head”

Here’s how you can leverage the Jetson Nano to achieve your vision:

1. Vision and Sophistication

- Facial Recognition:

- Use AI models to recognize individuals and tailor interactions based on who’s in front of the robot.

- NVIDIA libraries like DeepStream can handle this efficiently.

- Object Recognition:

- Showcase real-time object detection to demonstrate advanced visual capabilities.

- Integrate tools like YOLOv5 for identifying and discussing objects in the environment.

2. Advanced Conversation

- Natural Language Processing (NLP):

- Pair the Jetson Nano with a lightweight transformer model or connect it to an online API like OpenAI or Hugging Face for rich conversational AI.

- Include personal anecdotes or insights pulled from your website or notes.

- Voice Synthesis:

- Use tools like NVIDIA’s Riva for high-quality, human-like speech synthesis.

- Make the robot’s voice a reflection of your personality—perhaps with a touch of your own tone and cadence.

3. Integration of Personal Data

- Website as a Knowledge Base:

- Create a local database or API connection to your website to allow the robot to pull information dynamically.

- Example: If asked about a specific project or era of your life, the robot could recount detailed stories.

- Notes and Life Projects:

- Upload structured and unstructured data about your life, passions, and projects.

- Use semantic search tools to retrieve contextually relevant information.
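
To make the retrieval idea above concrete, here is a minimal sketch that picks the stored note most relevant to a question. It uses plain word-overlap (bag-of-words cosine similarity) as a simple stand-in for true semantic search, which would normally rely on an embedding model. The notes shown are placeholders, not real content from your site.

```python
# Sketch only: keyword-overlap retrieval as a stand-in for semantic search.
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def best_match(query, notes):
    """Return the note with the highest similarity to the query (notes must be non-empty)."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(n))), n) for n in notes]
    return max(scored, key=lambda pair: pair[0])[1]


if __name__ == "__main__":
    # Placeholder notes for illustration only.
    notes = [
        "Placeholder note about a robot head build with servos and a camera",
        "Placeholder note about a website redesign",
        "Placeholder note about a beach holiday",
    ]
    print(best_match("Tell me about the robot head", notes))
```

Swapping `cosine` over word counts for similarity over embedding vectors would keep the same overall shape while matching on meaning instead of exact words.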

4. Hardware Design

- Servo Control for Expressiveness:

- Use an Arduino or similar controller to manage servos for head and facial movements (eyes, lips, eyebrows).

- The Jetson Nano can send high-level commands to create lifelike expressions.

- Audio-Visual Sync:

- With the Nano, you can synchronize lip movements with speech for a more human-like interaction.
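
One simple way to approximate the lip sync described above is to drive the jaw servo from the loudness of the speech audio. The sketch below splits audio samples into short frames, measures each frame's RMS level, and maps it to a jaw angle. The 30 degree opening and the assumption that samples are normalised to the range -1.0 to 1.0 are illustrative choices, and real lip sync based on phonemes would be more convincing.

```python
# Sketch only: loudness-driven jaw movement.
# Assumes audio samples are floats normalised to [-1.0, 1.0].
import math


def frame_rms(samples):
    """Root-mean-square level of one frame of samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def jaw_angles(samples, frame_size, closed_deg=0.0, open_deg=30.0, full_scale=1.0):
    """Return one jaw angle per frame, scaled by that frame's loudness."""
    angles = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        level = min(1.0, frame_rms(frame) / full_scale)
        angles.append(closed_deg + (open_deg - closed_deg) * level)
    return angles


if __name__ == "__main__":
    silence = [0.0] * 4
    loud = [1.0, -1.0, 1.0, -1.0]
    print(jaw_angles(silence + loud, frame_size=4))
```

Each resulting angle could then be handed to whatever servo interface you settle on, at a rate matching the frame length.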

5. Showcase AI Capabilities

- Emotion Recognition:

- Use the Jetson Nano to analyze the emotional tone of the person interacting with it and respond appropriately.

- Dynamic Learning:

- Teach the robot to adapt to frequently asked questions or new topics over time.

Why This Approach Fits Perfectly

- Jetson Nano as the Brain: It excels in handling sophisticated AI tasks that will make the “Beach Bot” a standout feature.

- Talking Head Concept: By focusing on the upper body, you avoid the complexity of mobility while still achieving a high degree of interactivity.

- Personal Data Integration: The robot becomes a literal embodiment of your digital legacy—a unique and compelling way to showcase your life and work.

Next Steps

- Define Core Features:

- What should the robot prioritize? (E.g., storytelling, vision, interaction)

- What makes it uniquely “Beach”?

- Hardware Setup:

- Design the head framework and choose servos/motors for expressions.

- Decide on a hybrid setup if needed (Nano + Arduino).

- Software Integration:

- Train or adapt AI models for vision, speech, and NLP.

- Organize personal data into retrievable formats.

- Iterative Testing:

- Start simple and add layers of sophistication over time.

This project has immense potential to be a fascinating mix of cutting-edge AI and deeply personal storytelling. If you’d like, I can help you with specific technical challenges, from setting up the Jetson Nano for vision to organizing your personal data for conversational AI. Let me know!

You said:

Yes. I’ll copy and paste this particular conversation to my website under a page titled “Future Projects. Beach Bot”.

ChatGPT said:

That’s a perfect way to document your vision and progress! “Future Projects: Beach Bot” sounds like an intriguing title that will instantly draw attention. A project like this deserves a dedicated spot on your website, and sharing the journey will likely inspire others while showcasing your creativity and technical ingenuity.

If you want, I can help refine this conversation into a more structured, readable format for your website, with sections or bullet points that align with your style. Let me know if you’d like assistance with that!